Unleash the power of AI for developers by improving processes across your software delivery organization

Trusted by Enterprise Customers

Solutions That Manage The Complexities Of Software Delivery

The World’s Largest Enterprises Depend on Digital.ai to Automate Software Releases

% of Fortune 100 Companies

57 Worldwide Government Entities

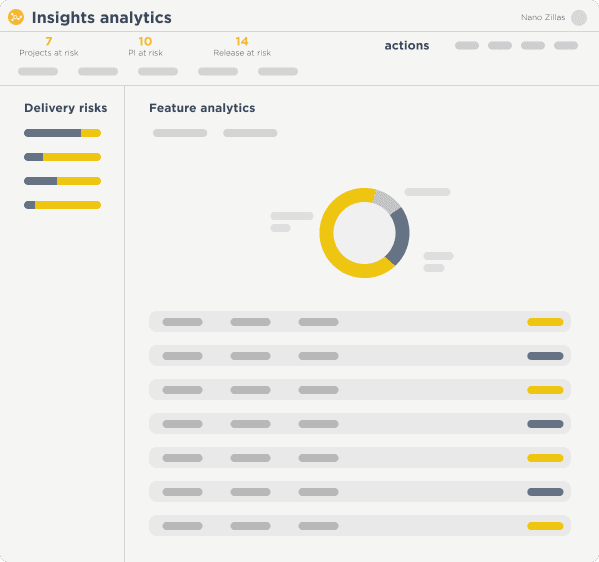

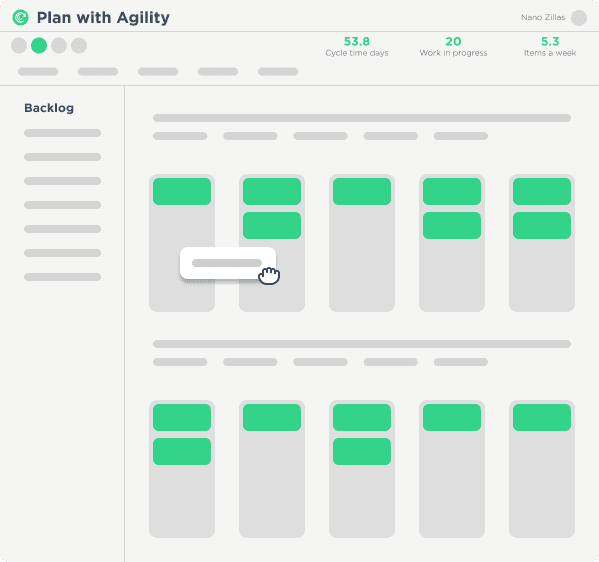

Explore Products Available On Our AI-Powered DevSecOps Platform

Our products help the world’s largest organizations automate software releases, improve mobile application testing and security, and provide insights across the software lifecycle.

How to Make AI-Generated Code Secure, Private and Compliant

See our solutions in action

Our team of experts is available to help accelerate your digital transformation.